Many phenomena in the natural sciences, such as the shapes of coastlines, rocks, plants and waves, the trajectories of organisms, and atmospheric flows, seem to present a high level of complexity, yet they may reveal self-similar or self-affine patterns at different resolutions. Although this structure may initially seem complex, it is actually a source of simplicity: a seemingly complex system can be explained by a relatively small set of parameters. For instance, research over the years has shown that the temporal variations of EEG (electroencephalography) signals exhibit long-range correlations over many time scales, indicating the presence of scale-invariant and self-similar structures. Such structures can be captured with non-linear analysis and with compression methods such as the Lempel-Ziv complexity algorithm (LZc) [1].

The LZc algorithm looks for repeating patterns in the data. Instead of describing the data using all of the original information, which may seem rather complex, it summarizes the data using only the underlying unique patterns. Each signal can therefore be described using less information and appears less complex than it initially seemed. Although there is no exact definition of complexity, we can think of it as an indicator of how a physical system evolves. Intuitively, and simplifying somewhat, changing the nodes, neurons or variables of a system, altering their coupling function or connectivity, or introducing noise that makes the signals less predictable all alter the complexity of the system. The essential question, which is of great interest to us and has not yet been fully answered, is the following: how does complexity change in the brain, what does it depend on, and how is it related to consciousness, intelligence, wisdom, and brain disorders?
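To make the idea more concrete, here is a minimal Python sketch of LZc applied to a signal. It assumes a common preprocessing choice, binarizing each sample around the signal median, and counts phrases with the Kaspar-Schuster procedure for LZ76 complexity. The function names (`binarize`, `lz76_complexity`, `normalized_lzc`) are our own, and the exact pipeline used in [1] may differ, for example in how the signal is binarized or normalized.

```python
import math
import random
import statistics


def binarize(signal):
    """Map each sample to '1' if it is above the signal median, else '0'."""
    m = statistics.median(signal)
    return "".join("1" if x > m else "0" for x in signal)


def lz76_complexity(s):
    """Count the phrases in the LZ76 parsing of a '0'/'1' string (Kaspar-Schuster)."""
    n = len(s)
    if n < 2:
        return n
    i, k, l = 0, 1, 1  # i: candidate start in the prefix, k: match length, l: start of current phrase
    c, k_max = 1, 1    # c: phrase count, k_max: longest match found for the current phrase
    while True:
        if s[i + k - 1] == s[l + k - 1]:
            k += 1
            if l + k > n:  # the last phrase runs to the end of the sequence
                c += 1
                break
        else:
            k_max = max(k_max, k)
            i += 1
            if i == l:  # every start position tried: close the phrase (length k_max)
                c += 1
                l += k_max
                if l + 1 > n:
                    break
                i, k, k_max = 0, 1, 1
            else:
                k = 1
    return c


def normalized_lzc(signal):
    """LZc divided by n / log2(n), the value approached by a long random binary sequence."""
    s = binarize(signal)
    n = len(s)
    return lz76_complexity(s) * math.log2(n) / n


if __name__ == "__main__":
    random.seed(0)
    n = 2000
    noise = [random.gauss(0, 1) for _ in range(n)]                          # unpredictable
    sine = [math.sin(2 * math.pi * 10 * (t + 0.5) / n) for t in range(n)]  # highly regular
    print("noise:", round(normalized_lzc(noise), 3))
    print("sine: ", round(normalized_lzc(sine), 3))
```

The white-noise signal should score close to 1, while the sine wave, which LZ76 can describe with only a handful of phrases, scores close to 0. This mirrors the intuition above: the more repeating patterns a signal contains, the less information is needed to describe it.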

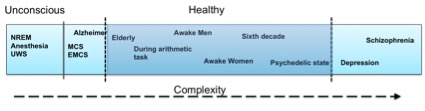

We still do not know exactly how brain complexity works, but several scientific studies have tackled the problem in various scenarios. To summarize some of the findings, patients with stroke, schizophrenia or depression seem to show higher LZ complexity than age-matched healthy controls, in both spontaneous and cognitive task-related EEG activity (e.g., [1]). In contrast, EEG complexity seems to decrease during anesthesia and NREM sleep, as well as in patients with Unresponsive Wakefulness Syndrome (UWS), Minimally Conscious State (MCS), Emergence from MCS (EMCS), or Locked-in Syndrome (LIS) [2]. Complexity also seems to decrease in patients with schizophrenia or depression, as well as in healthy controls, when participants perform a mental arithmetic task compared with their resting-state EEG [1]. Some of these findings are also supported by MEG (magnetoencephalography) studies: schizophrenia patients seem to have higher LZ complexity than healthy controls in their MEG signals too [3], and depressed patients seem to have higher pre-treatment MEG complexity, which decreases after 6 months of pharmacological treatment [4]. Although MEG and EEG record two distinct types of brain signals, their underlying complexity patterns seem to behave similarly. Other MEG studies have revealed decreased complexity in patients with Alzheimer’s disease compared to age-matched healthy controls [5], and, in healthy subjects, complexity that increases until the sixth decade of life and decreases afterwards, as well as higher complexity in females than in males [6]. A recent MEG study showed that complexity increases during a psychedelic state of consciousness induced by ketamine, LSD or psilocybin, compared with placebo [7]. So, what is happening in the brain that makes complexity increase in some cases and decrease in others? Let’s look at some of these cases below.

Let’s begin with the decrease in complexity during the mental arithmetic task, compared to spontaneous EEG activity. Why does complexity decrease in this case? According to [1], the decrease may be due to increased synchronization during mental activity, which typically reflects a state of internal concentration. In other words, the more concentrated a person is, the more organized their brain activity becomes, which results in lower complexity. As for the higher complexity found in schizophrenia and depression, both during the task and during spontaneous activity, the same paper [1] attributes it to an increase in the number of neurons participating in information processing in both disorders. Thus, it seems that patients with schizophrenia or depression recruit more neurons than healthy controls to perform the same task. The same study found higher complexity in schizophrenia patients than in depressed patients, whose values were closer to those of healthy controls. This suggests that information processing in schizophrenia patients may require the participation of more neurons, or more connections between them, compared to both healthy controls and depressed patients. But does increased complexity always imply a deficit? The answer is no.

As previously discussed, several studies have found that complexity increases with age in healthy subjects until around the age of sixty and decreases later on, and that it is higher in females than in males [6]. As argued in [8], these findings may reflect the continuous formation and maturation of neuronal assemblies, and the development of cortico-cortical connections between them with age, a development that starts to decline after middle age. According to [6], this reasoning is consistent with the age-related development (myelination) of cortical white matter, which is involved in cortico-cortical connections. Specifically, cortical white matter increases until it peaks at around the fourth decade of life, and then decreases. This behavior has been reported by various brain imaging studies. Of course, this explanation does not mean that complexity changes only when white matter changes, but rather that changes in white matter can provoke changes in cortical complexity. So far, no differences in white matter between males and females have been observed, which makes the higher complexity found in females even more difficult to explain.

Increased complexity was also found during a psychedelic state induced by ketamine, LSD or psilocybin [7]. In this case, the increase in complexity seems related to the richer, more expansive and more diverse scenes that participants reported compared with normal ones. The increase could be explained by greater neuronal participation and higher connectivity between neurons, driven by the increase in sensory information. The idea is that, since the environment is perceived as richer and more diverse, more neuronal mechanisms need to be engaged to perceive it. The authors relate their findings to bridging the gap between conscious content and conscious level, in the sense that increases in conscious level correspond to a broader range of conscious content.

Now, let’s see what happens in the cases in which complexity decreases. As discussed previously, complexity decreases in NREM sleep and anesthesia, as well as in UWS, MCS, EMCS and LIS patients [2]. This research used a perturbation-driven protocol, based on transcranial magnetic stimulation (TMS), to trigger a significant cortical response and then measured the complexity of that response. Across these states, the complexity metric, namely the perturbational complexity index (PCI, based on LZc), behaved the same way regardless of whether the loss of consciousness was due to a physiological process or to a pharmacological intervention. NREM sleep, anesthesia and UWS patients yielded similar complexity values, lower than those of MCS/EMCS patients, which in turn were lower than those of awake healthy controls. As the authors explain, complexity estimated this way measures both the information content and the integration of the corticothalamic system’s response.
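The final arithmetic step of a PCI-style index can also be sketched. As we read [2], the significant sources of the perturbation-evoked response are binarized into a sources × time matrix, its LZ complexity c(L) is computed, and the result is normalized by the sequence length L and the source entropy H of the binary symbols: PCI = c(L)·log2(L) / (L·H). The Python sketch below assumes that formula and a column-by-column flattening order; it leaves out the TMS, source modeling and statistical thresholding steps entirely, and the names `source_entropy` and `pci_like` are hypothetical.

```python
import math
import random


def lz76_complexity(s):
    """Number of phrases in the LZ76 parsing of a '0'/'1' string (Kaspar-Schuster)."""
    n = len(s)
    if n < 2:
        return n
    i, k, l, c, k_max = 0, 1, 1, 1, 1
    while True:
        if s[i + k - 1] == s[l + k - 1]:
            k += 1
            if l + k > n:
                c += 1
                break
        else:
            k_max = max(k_max, k)
            i += 1
            if i == l:
                c += 1
                l += k_max
                if l + 1 > n:
                    break
                i, k, k_max = 0, 1, 1
            else:
                k = 1
    return c


def source_entropy(s):
    """Shannon entropy (bits) of the 0/1 symbol distribution in s."""
    p1 = s.count("1") / len(s)
    if p1 in (0.0, 1.0):
        return 0.0
    return -p1 * math.log2(p1) - (1 - p1) * math.log2(1 - p1)


def pci_like(matrix):
    """PCI-style index of a binary matrix given as rows = sources, columns = time samples."""
    n_sources, n_samples = len(matrix), len(matrix[0])
    # Assumed flattening: all sources at time 0, then all sources at time 1, and so on.
    s = "".join(str(matrix[r][t]) for t in range(n_samples) for r in range(n_sources))
    L = len(s)
    h = source_entropy(s)
    if h == 0.0:  # no significant activity (or saturation): nothing to compress
        return 0.0
    return lz76_complexity(s) * math.log2(L) / (L * h)


if __name__ == "__main__":
    random.seed(1)
    n_sources, n_samples = 10, 200
    # Every source repeats the same random time course: widespread but undifferentiated.
    shared = [random.randint(0, 1) for _ in range(n_samples)]
    synchronized = [shared[:] for _ in range(n_sources)]
    # Each source has its own random time course: differentiated but unstructured.
    independent = [[random.randint(0, 1) for _ in range(n_samples)] for _ in range(n_sources)]
    print("synchronized:", round(pci_like(synchronized), 3))
    print("independent: ", round(pci_like(independent), 3))
```

With these toy inputs, a silent matrix scores 0 and the synchronized matrix scores much lower than the independent one. Note that this step alone rewards differentiation; as we understand [2], the link to integration comes from the protocol itself, since only significant, perturbation-evoked activity enters the matrix.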

So, what should the aim be: high or low brain complexity? Figure 1 gives an intuitive picture of how complexity varies across these states. As with everything else in life, it seems that neither too much nor too little brain complexity is good for our well-being, as the position of the healthy states on the complexity spectrum suggests. There is still much to understand about the whole complexity picture, since some relationships along the complexity spectrum are still missing, but don’t worry… we are working on that! If you are interested in the concept, you can also have a look at our H2020 Luminous project on studying, measuring and modifying consciousness. Keep an eye out for new findings resulting from our work! I hope this post helps you wrap your mind around the complex matter of complexity.


Figure 1. Intuitive description of the complexity spectrum


References:

[1]. Li, Y., et al. (2008). Abnormal EEG complexity in patients with schizophrenia and depression. Clinical Neurophysiology, 119(6), 1232-1241.

[2]. Casali, A. G., Gosseries, O., Rosanova, M., Boly, M., Sarasso, S., Casali, K. R., … & Massimini, M. (2013). A theoretically based index of consciousness independent of sensory processing and behavior. Science Translational Medicine, 5(198), 198ra105.

[3]. Fernández, A., López-Ibor, M. I., Turrero, A., Santos, J. M., Morón, M. D., Hornero, R., … & López-Ibor, J. J. (2011). Lempel–Ziv complexity in schizophrenia: a MEG study. Clinical Neurophysiology, 122(11), 2227-2235.

[4]. Méndez, M. A., Zuluaga, P., Hornero, R., Gómez, C., Escudero, J., Rodríguez-Palancas, A., … & Fernández, A. (2012). Complexity analysis of spontaneous brain activity: effects of depression and antidepressant treatment. Journal of Psychopharmacology, 26(5), 636-643.

[5]. Gómez, C., & Hornero, R. (2010). Entropy and complexity analyses in Alzheimer’s disease: An MEG study. The Open Biomedical Engineering Journal, 4, 223.

[6]. Fernández, A., Zuluaga, P., Abásolo, D., Gómez, C., Serra, A., Méndez, M. A., & Hornero, R. (2012). Brain oscillatory complexity across the life span. Clinical Neurophysiology, 123(11), 2154-2162.

[7]. Schartner, M. M., Carhart-Harris, R. L., Barrett, A. B., Seth, A. K., & Muthukumaraswamy, S. D. (2017). Increased spontaneous MEG signal diversity for psychoactive doses of ketamine, LSD and psilocybin. Scientific Reports, 7.

[8]. Anokhin, A. P., Birbaumer, N., Lutzenberger, W., Nikolaev, A., & Vogel, F. (1996). Age increases brain complexity. Electroencephalography and Clinical Neurophysiology, 99(1), 63-68.
